Getting Started with Docker: A Beginner’s Guide

Blog Team

What is Docker?

Docker is a platform designed to help you build, test, and deploy applications quickly and reliably. It uses containerization, a lightweight form of virtualization that lets developers package an application with everything it needs (such as libraries and dependencies) and ship it as a single unit. Unlike a virtual machine, a container shares the host's operating system kernel, which is what keeps it lightweight. Containerization is the key concept in Docker: because each container isolates the application and its dependencies from the underlying system, the application runs the same way regardless of the environment.

For instance, whether you're deploying code to development, testing, or production, Docker provides the same environment each time. This minimizes problems caused by differing operating systems, library versions, or system configurations, which often lead to the frustrating 'it works on my machine' problem. At UpTeam, this consistency across environments is essential to keeping our projects running smoothly and efficiently.

Why is Docker Knowledge Crucial for Modern Tech Jobs?

Many modern tech roles involve building, deploying, or maintaining scalable, cloud-based applications. At UpTeam, Docker allows us to create isolated environments for testing and development, speeding up deployment and reducing the risk of system conflicts. Here's why Docker knowledge can help you:

Simplifies Development Workflows - Docker lets developers work in isolated containers and replicate production environments on their own machines. This is especially valuable when you work on multiple projects, since each project's dependencies stay separate and what you test locally matches what runs in production.

Scalability & Cloud Integration - Many of our projects run in the cloud, and Docker is a great tool for creating scalable environments, especially when paired with orchestration tools like Kubernetes.

Faster Deployment - Our CI/CD (continuous integration and continuous deployment) pipelines rely heavily on Docker, which ensures that new code is tested and deployed in a consistent environment and lets features and bug fixes ship quickly. Knowing how Docker works is essential for keeping up with fast development cycles.

Cross-Team Collaboration - Docker gives developers, testers, and operations team members a shared platform, so everyone works with the same environments, which improves collaboration across the team.

Why Docker Matters at UpTeam

At UpTeam, Docker is a fundamental tool in many projects, and it's used by everyone: developers, DevOps engineers, and QA professionals. Most of our open positions require Docker knowledge, so it's worth understanding the basics before applying. This guide will help you prepare and feel more competitive in your job search.

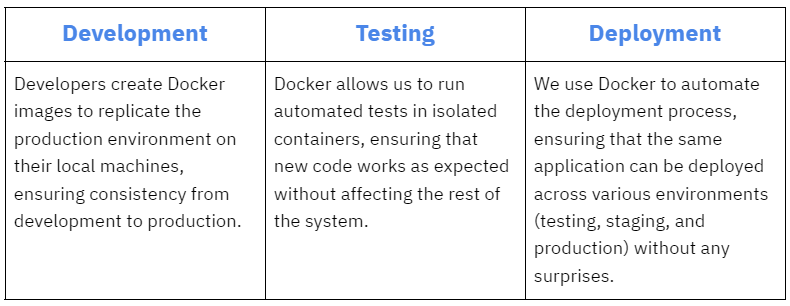

How We Use Docker

At UpTeam, Docker is integral to our development pipeline. Here are a few key areas where it comes into play:

Check out our current open positions here to see how Docker fits into various roles.

Now, let’s see how to take your understanding of Docker to the next level.

Start with the Basics

Before diving into advanced concepts, it’s essential to grasp Docker’s core features. The official Docker documentation provides a thorough introduction to Docker’s fundamental tools and ideas.

Key topics to explore:

- What is Docker? Understand the concept of containerization and how Docker enables you to package and deploy applications in isolated containers.

- How to Install Docker: The installation guide walks you through setting up Docker on your local machine, whether on Windows, macOS, or Linux.

- Create and Run Your First Container: The getting started tutorial will help you run your first Docker container. This includes using Docker Hub to pull pre-built images and running a simple containerized application.
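Once Docker is installed, the first steps from the tutorial come down to a few commands. The sketch below wraps them in small shell functions that you can paste into a terminal and call by name; the function names and the container name `my-nginx` are hypothetical, while `hello-world` and `nginx` are official images on Docker Hub.

```shell
# Check that the Docker CLI is installed and can reach the Docker daemon.
check_install() {
  docker --version &&
  docker info
}

# Pull a tiny test image from Docker Hub and run it once.
# --rm deletes the container as soon as it exits.
first_container() {
  docker pull hello-world &&
  docker run --rm hello-world
}

# Run an nginx web server in the background (-d) and publish
# container port 80 on host port 8080 (-p HOST:CONTAINER).
start_nginx() {
  docker run -d --name my-nginx -p 8080:80 nginx
}

# Stop and remove the nginx container when you're done.
stop_nginx() {
  docker stop my-nginx &&
  docker rm my-nginx
}
```

For example, run `start_nginx`, open http://localhost:8080 in a browser to see the nginx welcome page, then run `stop_nginx` to clean up.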

To build a strong foundation, you can dig through the official documentation and follow the Getting Started with Docker guide.

Deep Dive into Docker

Now that you've learned the basics, it's time to explore Docker's more advanced features and real-world applications. This section will cover building custom Docker images, managing multi-container applications with Docker Compose, handling networking, and preparing Docker for production environments.

Building and Managing Docker Images

One of the most powerful features of Docker is the ability to create custom images. Docker images are lightweight, stand-alone, and executable packages that include everything needed to run a piece of software, from the code itself to runtime, libraries, and environment variables. Let’s break down how to create and manage your Docker images.

- Writing a Dockerfile

A Dockerfile is a text file containing the instructions Docker follows to build an image: which base image to start from, what software to install, and what configuration to apply. Here's a basic example of a Dockerfile for a Node.js application:

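```dockerfile
# Use the official Node.js image as the base (pick a currently supported LTS version)
FROM node:20

# Set the working directory inside the container
WORKDIR /app

# Copy package.json and package-lock.json first, so the dependency layer
# is reused from cache when only the source code changes
COPY package*.json ./

# Install dependencies
RUN npm install

# Copy the rest of the application's code
# (list node_modules in .dockerignore so a local copy doesn't overwrite the installed one)
COPY . .

# Document the port the app listens on (it is published with docker run -p)
EXPOSE 8080

# Specify the command to run your application
CMD ["node", "app.js"]
```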

This Dockerfile starts with an official Node.js image and customizes it by adding your application’s code and dependencies. Once you've written your Dockerfile, you can build the image with the following command:

```shell
docker build -t my-node-app .
```
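After the build finishes, you can start a container from the new image. EXPOSE only documents the port, so you still publish it with `-p`. A minimal sketch, assuming the app listens on port 8080 as in the Dockerfile above; the helper function names are hypothetical.

```shell
# Run the image in the foreground; -p 8080:8080 maps host port 8080
# to container port 8080, and --rm removes the container on exit.
run_app() {
  docker run --rm -p 8080:8080 my-node-app
}

# Alternatively, run it in the background under a fixed name and
# follow its log output (Ctrl+C stops following, not the container).
run_app_detached() {
  docker run -d --name my-node-app -p 8080:8080 my-node-app &&
  docker logs -f my-node-app
}

# List running containers, then stop and remove the background one.
stop_app() {
  docker ps &&
  docker stop my-node-app &&
  docker rm my-node-app
}
```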

- Managing Docker Images

Once you've built your image, you can list it, tag it, and push it to Docker Hub for others to use. To list all images on your machine:

```shell
docker images
```

To tag your image (for example, with a version number or environment):

```shell
docker tag my-node-app my-dockerhub-username/my-node-app:v1.0
```

Finally, push your image to Docker Hub (run `docker login` first if you haven't authenticated yet):

```shell
docker push my-dockerhub-username/my-node-app:v1.0
```
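Anyone with access to the repository can then pull the image by its full name and run it. The sketch below reuses the placeholder name `my-dockerhub-username/my-node-app:v1.0` from the commands above and adds two housekeeping commands for local images; the helper function names are hypothetical.

```shell
# Pull the published image and run it on another machine.
# (docker run would also pull it automatically if it's missing locally.)
run_published() {
  docker pull my-dockerhub-username/my-node-app:v1.0 &&
  docker run --rm -p 8080:8080 my-dockerhub-username/my-node-app:v1.0
}

# Remove this tag from the local machine (the image stays on Docker Hub).
remove_local_tag() {
  docker rmi my-dockerhub-username/my-node-app:v1.0
}

# Delete dangling images (untagged layers left behind by rebuilds).
clean_dangling() {
  docker image prune
}
```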

Docker Compose: Managing Multi-Container Applications

Most real-world applications are more complex than a single container. You might need to run a web server, database, and other services together. Docker Compose allows you to define and manage these multi-container applications in a simple YAML file, making it easy to bring up all your services at once.

- Writing a Docker Compose File

Here's an example of a `docker-compose.yml` file for a web application that depends on a PostgreSQL database:

```yaml
services:
  web:
    image: my-node-app
    build: .
    ports:
      - "8080:8080"
    depends_on:
      - db

  db:
    image: postgres:13
    environment:
      POSTGRES_USER: user
      POSTGRES_PASSWORD: password
      POSTGRES_DB: myappdb
```

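This configuration defines two services: `web` and `db`. The `web` service builds and runs your custom Node.js application, while the `db` service runs a PostgreSQL database. The `depends_on` directive makes Compose start the `db` container before the `web` container. It doesn't wait for PostgreSQL to be ready to accept connections, though, so your application should retry its initial database connection (or you can add a healthcheck to the `db` service and depend on that).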

Starting a Multi-Container Application

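With Docker Compose, you can bring up your entire stack with a single command (current Docker releases include Compose as the `docker compose` plugin; older setups use the standalone `docker-compose` command):

```shell
docker compose up
```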

This command reads your `docker-compose.yml` file, builds the images (if necessary), and starts all the containers. To stop and remove the containers, along with the network Compose created for them, run:

```shell
docker compose down
```
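Beyond `up` and `down`, a handful of Compose commands cover most day-to-day work with a stack like the one defined above. A sketch using the Compose v2 `docker compose` syntax and the `web`/`db` service names and credentials from the example file; the helper function names are hypothetical.

```shell
# Start the stack in the background (-d), rebuilding images first.
stack_up() {
  docker compose up -d --build
}

# Show the status of each service's containers.
stack_status() {
  docker compose ps
}

# Follow the log output of the web service only.
web_logs() {
  docker compose logs -f web
}

# Open a psql shell inside the db container, using the
# POSTGRES_USER and POSTGRES_DB values from the example file.
db_shell() {
  docker compose exec db psql -U user -d myappdb
}

# Stop and remove containers and networks. Named volumes are kept;
# adding --volumes deletes them too (and erases database data).
stack_down() {
  docker compose down
}
```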

Networking and Volumes in Docker

- Networking in Docker

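Networking is a key aspect of multi-container applications. When you start a stack, Compose creates a network for the project and attaches every service to it, so the containers can communicate with each other. In the example above, the `web` service can reach the `db` service at the hostname `db`, because Docker provides built-in DNS resolution between containers on the same user-defined network. (Containers on Docker's default bridge network, which plain `docker run` uses, can't resolve each other by name.)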

You can also create custom networks using the following command:

```shell
docker network create my-network
```

To connect your containers to this network, modify the Docker Compose file:

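```yaml
# Other settings (build, ports, environment) from the earlier example are omitted.
services:
  web:
    image: my-node-app
    networks:
      - my-network

  db:
    image: postgres:13
    networks:
      - my-network

networks:
  my-network:
    # Reuse the network created with `docker network create my-network`.
    # Without external: true, Compose would create its own network instead
    # (named <project>_my-network), using the default bridge driver.
    external: true
```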
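To see what Docker actually set up, you can list and inspect networks and check name resolution from inside a container. A sketch, assuming the stack from this section is running; the helper function names are hypothetical, and `getent` is available in Debian-based images such as the non-Alpine official node images.

```shell
# List all networks. Compose-created networks are prefixed with the
# project name (by default, the name of the project directory).
list_networks() {
  docker network ls
}

# Show details of my-network, including the containers attached to it
# and their IP addresses.
inspect_network() {
  docker network inspect my-network
}

# From inside the running web container, resolve the db service by name.
resolve_db() {
  docker compose exec web getent hosts db
}
```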

Volumes for Persistent Data

Docker containers are ephemeral: any data written inside a container is lost once the container is removed. To persist data, Docker uses volumes, which store data outside the container's writable layer, in storage that Docker manages on the host machine.

For example, if you want to persist PostgreSQL data, you can modify your docker-compose.yml like this:

```yaml
services:
  db:
    image: postgres:13
    # (environment settings from the earlier example omitted)
    volumes:
      - pgdata:/var/lib/postgresql/data

volumes:
  pgdata:
```

This creates a named volume, `pgdata`, where your database data is stored. Even if the `db` container is removed, the data persists and is reused when the container is recreated, unless you delete the volume explicitly (for example, with `docker compose down --volumes`).
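Volumes can be listed, inspected, and backed up from the command line. The backup helper below follows the common pattern of mounting the volume and the current directory into a throwaway container and archiving one into the other; the helper function names and the choice of the `ubuntu` image for running `tar` are illustrative assumptions.

```shell
# List volumes. Compose prefixes named volumes with the project name,
# so pgdata typically appears as <project>_pgdata.
list_volumes() {
  docker volume ls
}

# Show a volume's details, including its Mountpoint on the host.
# $1: volume name as printed by list_volumes.
inspect_volume() {
  docker volume inspect "$1"
}

# Archive a volume's contents into ./<volume>.tar.gz using a throwaway
# container. Stop the db service first to get a consistent copy.
# $1: volume name as printed by list_volumes.
backup_volume() {
  docker run --rm -v "$1":/data:ro -v "$PWD":/backup ubuntu \
    tar czf "/backup/$1.tar.gz" -C /data .
}
```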

Docker in Production

Docker isn't just for development. It's widely used in production, where it lets you deploy applications consistently across servers and environments. Here are some considerations for running Docker in production:

Optimizing Docker Images

Large Docker images can slow down your deployment process. Use multi-stage builds to keep your images lean by only including what’s necessary for the production environment:

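```dockerfile
# First stage - build the app
FROM node:20 AS builder
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
RUN npm run build

# Second stage - use a smaller image for production
FROM node:20-alpine
WORKDIR /app
# Install only runtime dependencies; dev dependencies stay in the builder stage
COPY package*.json ./
RUN npm install --omit=dev
# Copy only the build output (assumes `npm run build` writes app.js to /app/build)
COPY --from=builder /app/build ./
CMD ["node", "app.js"]
```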
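To check that the multi-stage build pays off, build both variants and compare their sizes. A sketch assuming the multi-stage Dockerfile is saved as `Dockerfile.prod` (a hypothetical filename) next to the original `Dockerfile`; the tags and helper function names are also illustrative.

```shell
# Build the single-stage image from ./Dockerfile.
build_full() {
  docker build -t my-node-app:full .
}

# Build the multi-stage image; -f selects a Dockerfile other than the default.
build_slim() {
  docker build -f Dockerfile.prod -t my-node-app:slim .
}

# Build only the first stage (named "builder"), e.g. to debug the build step.
build_builder_stage() {
  docker build -f Dockerfile.prod --target builder -t my-node-app:builder .
}

# List every tag of my-node-app; compare the SIZE column.
compare_sizes() {
  docker images my-node-app
}
```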

Security Best Practices

- Always use official images or images from trusted publishers on Docker Hub.

- Regularly update your base images to pick up security patches.

- Run containers as a non-root user to limit the damage if a container is compromised.
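Two of these practices map directly onto Docker CLI flags: `--pull` makes `docker build` fetch the newest version of the base image, and `--user` overrides the user a container runs as. The official Node.js images include a non-root user named `node`, which this sketch uses (in your own Dockerfile, the equivalent is a `USER node` instruction before `CMD`). The `node:20-alpine` tag and the helper function names are illustrative choices.

```shell
# Rebuild the image, pulling a newer base image if one is available,
# so security patches published for the base image are included.
rebuild_with_fresh_base() {
  docker build --pull -t my-node-app .
}

# Print the user a container runs as by default (root, unless the image sets USER).
whoami_default() {
  docker run --rm node:20-alpine whoami
}

# Print the user when --user runs the process as the non-root "node" user.
whoami_nonroot() {
  docker run --rm --user node node:20-alpine whoami
}
```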

Orchestrating Containers with Docker Swarm or Kubernetes

You often need to scale your application across multiple servers in production environments. Docker Swarm and Kubernetes are tools for orchestrating large numbers of containers across distributed systems. While Docker Swarm is easier to set up, Kubernetes is more powerful and widely used in larger organizations.
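Docker Swarm can run on a single machine, which makes it an easy way to try orchestration. A minimal sketch, assuming the image was pushed to Docker Hub under the placeholder name used earlier; the service name `web`, the stack name `my-stack`, and the helper function names are hypothetical.

```shell
# Turn this machine into a single-node swarm (it becomes a manager).
init_swarm() {
  docker swarm init
}

# Run three replicas of the image as a service, published on port 8080.
# Swarm spreads the replicas across the available nodes.
deploy_service() {
  docker service create --name web --replicas 3 -p 8080:8080 \
    my-dockerhub-username/my-node-app:v1.0
}

# Change the number of replicas, e.g. scale_service 5.
scale_service() {
  docker service scale "web=$1"
}

# Deploy a whole Compose file as a stack. Swarm ignores build:,
# so every image must already be pushed to a registry.
deploy_stack() {
  docker stack deploy -c docker-compose.yml my-stack
}
```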

Continue Learning and Growing with Docker

Now that you’ve got a solid foundation, it’s important to continue exploring Docker and its capabilities. Docker is a powerful tool, but like any technology, it takes time and practice to master. Here are a few ways you can keep growing your skills:

Explore Docker’s Official Resources

Docker offers an extensive set of learning materials and tutorials to help you deepen your understanding. The Docker Documentation is a great place to start. In particular, the “Docker Labs” section provides hands-on learning opportunities to practice what you've learned in real-world scenarios.

Contribute to Open Source Projects

One of the best ways to learn Docker is by contributing to open-source projects that use containers. This will give you hands-on experience with Docker in a live development environment. Many open-source projects actively use Docker for their CI/CD pipelines, development, and testing. You can find these projects on GitHub and start by checking out the “Issues” tab to see where you can help.

Practice with Personal Projects

To get comfortable with Docker, try using it in your own projects. Whether it’s a small web app, a personal blog, or a hobby project, containerizing your applications will give you valuable experience in building and deploying software using Docker.

Explore Docker Certification Programs

If you're looking to formalize your skills, certification programs can demonstrate your proficiency in container technologies. Certifications like the Docker Certified Associate (DCA) can give you an edge when applying for tech roles that require Docker expertise.

Learn Kubernetes

If you’re ready to take your container knowledge to the next level, Kubernetes is the perfect follow-up to Docker. It allows you to manage and orchestrate containerized applications at scale. We’ve also written a beginner-friendly guide to Kubernetes, which you can check out here. This will help you understand how Docker and Kubernetes work together in modern tech environments.

By mastering the basics of Docker and gradually advancing through practical projects, you’ll gain the skills needed to tackle real-world challenges and be well-prepared for roles at companies like ours.

If you're interested in working with us, be sure to check out our open positions and see how Docker knowledge can help you thrive at UpTeam. We look forward to seeing you grow in your career and hope this guide has set you on the right path!
